Overview of RAG

Imagine you want to integrate a chatbot into your website, one that only answers questions regarding your portfolio and the details you've shared about yourself. There are several methods to achieve this goal, and Retrieval-Augmented Generation (RAG) stands out as a particularly effective approach.

Large Language Models (LLMs) such as the GPT models behind ChatGPT (from OpenAI) and Llama (from Meta) have attracted significant attention in recent years. These models are central to Generative AI: they generate text from the instructions they are given and assist with tasks such as writing emails, coding, and summarizing text. Modern LLMs are built on the transformer neural network architecture, introduced in 2017.

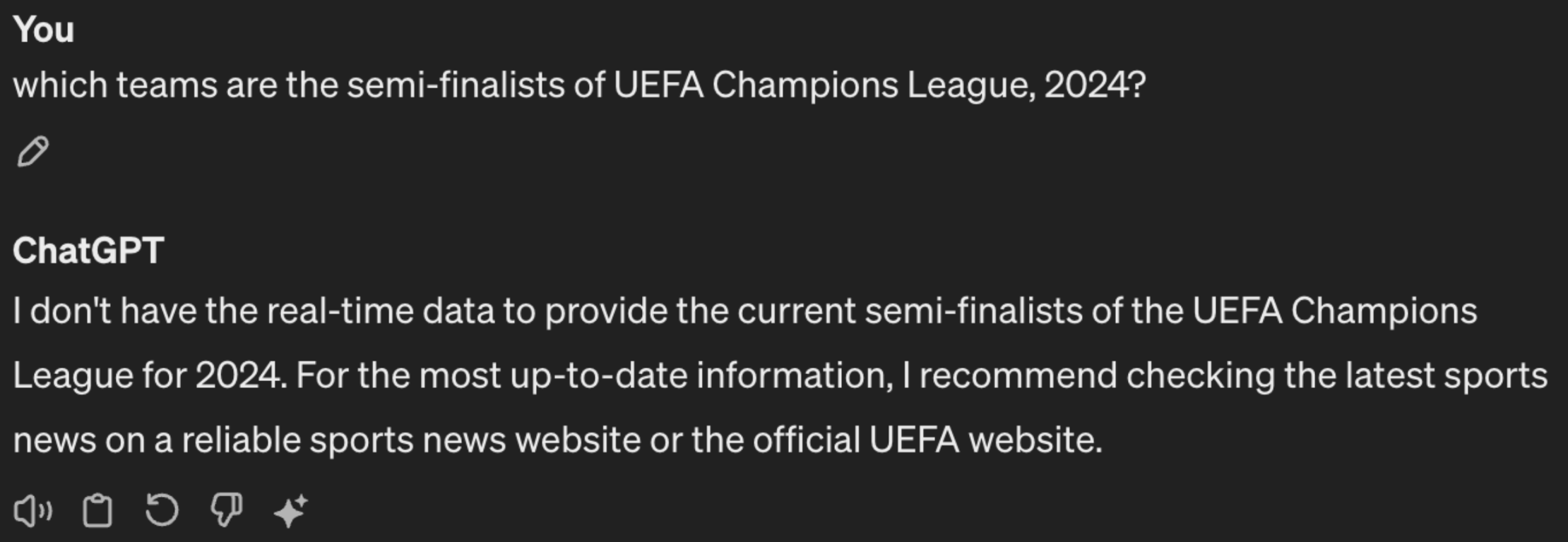

However, a primary challenge with LLMs is that their knowledge is fixed at training time and they do not cite sources when generating answers, which undermines their reliability for fact-based questions. Here's an example where ChatGPT lacks updated information.

The groundbreaking Retrieval-Augmented Generation (RAG) paper was published in 2020, when Patrick Lewis was completing his doctorate in Natural Language Processing (NLP) at University College London while also working at Meta. The team aimed to enhance the knowledge capacity of LLMs and devised a benchmark to gauge their progress. When Lewis integrated a retrieval system from another Meta team into their project, the initial results surpassed expectations.

Retrieval-Augmented Generation (RAG) is a framework, or pipeline, designed to improve the accuracy and relevance of Large Language Models (LLMs) for specific use cases. Rather than depending solely on the LLM's training data, a RAG pipeline adds source data, or context, to each prompt. When a user asks a question, a retrieval step first fetches the most relevant passages from the provided data source. Those passages are then placed in the prompt alongside the question, and the LLM uses them to generate a contextually appropriate answer.
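The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the documents, the word-overlap scoring, and the function names (`retrieve`, `build_prompt`) are all hypothetical. Real pipelines typically rank passages with embeddings and a vector index rather than shared words, and the final prompt would be sent to an LLM, which is left out here.

```python
import re
from collections import Counter

# Hypothetical knowledge base: facts a portfolio site might hold.
DOCUMENTS = [
    "The author is a backend developer who works mainly with Go and PostgreSQL.",
    "The author built an open-source image resizing service in 2022.",
    "The author enjoys hiking and landscape photography.",
]


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_score(query: str, document: str) -> int:
    """Count the word occurrences shared by the query and the document."""
    shared = tokenize(query) & tokenize(document)
    return sum(shared.values())


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents ranked by word overlap with the query."""
    ranked = sorted(
        documents,
        key=lambda doc: overlap_score(query, doc),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, context: list[str]) -> str:
    """Place the retrieved passages in the prompt ahead of the question."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}\nAnswer:"
    )


question = "Which programming languages does the author work with?"
prompt = build_prompt(question, retrieve(question, DOCUMENTS))
print(prompt)  # This string is what would be sent to the LLM.
```

The point of the sketch is the ordering of the steps: retrieval happens before generation, so the model sees the relevant passages in the same prompt as the question.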

As a result, LLM hallucination, which often stems from excessive dependence on training data, is reduced by instructing the LLM to consult the source data before responding to each question. This approach also makes the LLM's answers more trustworthy: when the retrieved context does not contain the answer, the model can be instructed to reply with “I don’t know” instead of giving the user an incorrect answer.
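One way to picture the “I don’t know” behavior is a guard that skips generation when nothing relevant is retrieved, combined with a prompt that tells the model to stay within the context. The sketch below assumes a hypothetical `generate` callable standing in for a real LLM call; the stopword list, the threshold `min_shared_words`, and the sample documents are illustrative choices, not part of any particular library.

```python
import re
from typing import Callable

FALLBACK = "I don't know."

# Small illustrative stopword list so common words do not count as matches.
STOPWORDS = {"the", "a", "an", "is", "of", "what", "with", "and", "in", "does", "which"}


def content_words(text: str) -> set[str]:
    """Return the lowercase words of the text, minus stopwords."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def answer(
    query: str,
    documents: list[str],
    generate: Callable[[str], str],
    min_shared_words: int = 1,
) -> str:
    """Answer from the documents, or fall back when none looks relevant."""
    query_words = content_words(query)
    best = max(
        documents,
        key=lambda doc: len(query_words & content_words(doc)),
        default=None,
    )
    if best is None or len(query_words & content_words(best)) < min_shared_words:
        # Nothing relevant was retrieved, so do not let the model guess.
        return FALLBACK
    prompt = (
        "Answer using only the context. If the context does not contain "
        f"the answer, reply exactly: {FALLBACK}\n\n"
        f"Context: {best}\n\nQuestion: {query}\nAnswer:"
    )
    return generate(prompt)


def stub_llm(prompt: str) -> str:
    """Stand-in for a real model call (an assumption for this sketch)."""
    return f"[model output for a prompt of {len(prompt)} characters]"


docs = [
    "The portfolio includes a weather dashboard built with React.",
    "The contact form sends email through a serverless function.",
]
print(answer("What is the capital of France?", docs, stub_llm))
print(answer("How does the contact form work?", docs, stub_llm))
```

The guard handles the case where retrieval finds nothing, while the instruction in the prompt covers the case where a passage was retrieved but does not actually answer the question.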

What are the applications of the RAG pipeline/framework?

Question answering: RAG performs well in question-answering tasks that require factual information and verification. By grounding its output in the data the user provides, a RAG framework can generate answers that are more likely to be factually correct.

Document Summarization: When summarizing long documents, RAG can retrieve the key pieces of information from the document and produce short yet comprehensive summaries.

Language Translation: By retrieving contextually relevant information, RAG can improve the quality of language translation, especially in cases involving specialized vocabulary.

Speech-to-text conversion: When paired with an audio-to-text conversion model, a RAG pipeline can retrieve details from transcribed audio content and produce responses aligned with the user's query.

Introduction to Agents

LLMs have capabilities beyond simply predicting the next word, and their use cases extend past question answering and chat. An LLM can be tasked with a complex activity such as booking a flight ticket. It can make a plan by breaking the task into steps or sub-tasks, execute each step, monitor the outcomes, reason about successes and failures, and adapt the plan accordingly. It can also adjust its actions based on feedback. Such systems are known as Autonomous Agents.
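The plan, execute, monitor, and adapt cycle can be sketched as a simple loop. Everything here is a hypothetical simplification: in a real agent the LLM would produce the plan and decide how to adapt, whereas this sketch hard-codes the plan for the flight-booking example and uses a plain retry as its only form of adaptation. The tool names and the `StepResult` type are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class StepResult:
    ok: bool
    detail: str


def run_agent(
    plan: list[str],
    tools: dict[str, Callable[[], StepResult]],
    max_retries: int = 1,
) -> list[str]:
    """Execute each planned step, observe the result, and retry on failure."""
    log: list[str] = []
    for step in plan:
        for attempt in range(1, max_retries + 2):
            result = tools[step]()  # execute the step
            status = "ok" if result.ok else "failed"
            log.append(f"{step} (attempt {attempt}): {status} - {result.detail}")
            if result.ok:  # monitor the outcome
                break
        else:
            # Every attempt failed: stop instead of continuing a broken plan.
            log.append(f"abandoning plan at step: {step}")
            break
    return log


def search_flights() -> StepResult:
    """Hypothetical tool that always succeeds."""
    return StepResult(True, "found 3 flights")


def make_flaky_payment() -> Callable[[], StepResult]:
    """Build a hypothetical payment tool that fails once, then succeeds."""
    calls = {"count": 0}

    def pay() -> StepResult:
        calls["count"] += 1
        if calls["count"] == 1:
            return StepResult(False, "card declined")
        return StepResult(True, "payment accepted")

    return pay


tools = {"search_flights": search_flights, "pay": make_flaky_payment()}
log = run_agent(["search_flights", "pay"], tools)
for line in log:
    print(line)
```

The log makes the monitoring step visible: the payment fails on the first attempt, the loop notices the failure, and the step is retried before the plan continues.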

These intelligent systems can think and act independently and are designed to execute specific tasks without constant human supervision. They use reasoning, which we can reinforce with prompts and instructions. Virtual assistants like Siri and Alexa are also types of agents that we control through voice commands.

What are the applications of AI Agents?

- Self-driving cars - AI agents help with real-time path planning and navigation, finding efficient and safe routes by analyzing traffic data, road conditions, and GPS signals. The car can then follow the route automatically and reach the destination.

- Virtual assistants - AI virtual assistants help manage calendars, set reminders, and schedule meetings, making personal and professional life more organized. They can also sense from their environment whether a task has been completed and update the calendar accordingly.

- Healthcare, Finance, and Education - AI agents can analyze medical data and patient history to assist doctors in diagnosing diseases and suggesting treatment plans. They interpret medical images like X-rays, MRIs, and CT scans to detect abnormalities and assist radiologists in their assessments.

What are the potential drawbacks of RAG?

By limiting answers to the information provided (which is finite and far smaller than the internet), we restrict the capability of the LLM.

This also means the knowledge base given to the RAG components must be curated with care. The LLM assumes the provided information is true and does not cross-check it against potentially more accurate information available elsewhere, such as on the internet.

Fine-tuning and Retrieval-Augmented Generation (RAG) play different roles in language processing, and RAG is not always the better choice. In some cases, fine-tuning offers superior accuracy and consistency because the model grasps the nuances of the domain better while also retaining its prior knowledge.

In the upcoming modules, we will explore RAG pipelines in detail and examine their mechanics, pointing out the fundamental differences between using a RAG pipeline and fine-tuning an LLM.