Have you ever considered creating a human-like clone that imitates a real person? Have you thought about developing an AI that replicates human faculties such as perception, planning, reflection, action-taking, and memory, all coordinated by a brain?

The diagram below shows how human-like agents or AI systems function, drawing parallels to human cognition and decision-making. Let me break it down and relate it to autonomous agents:

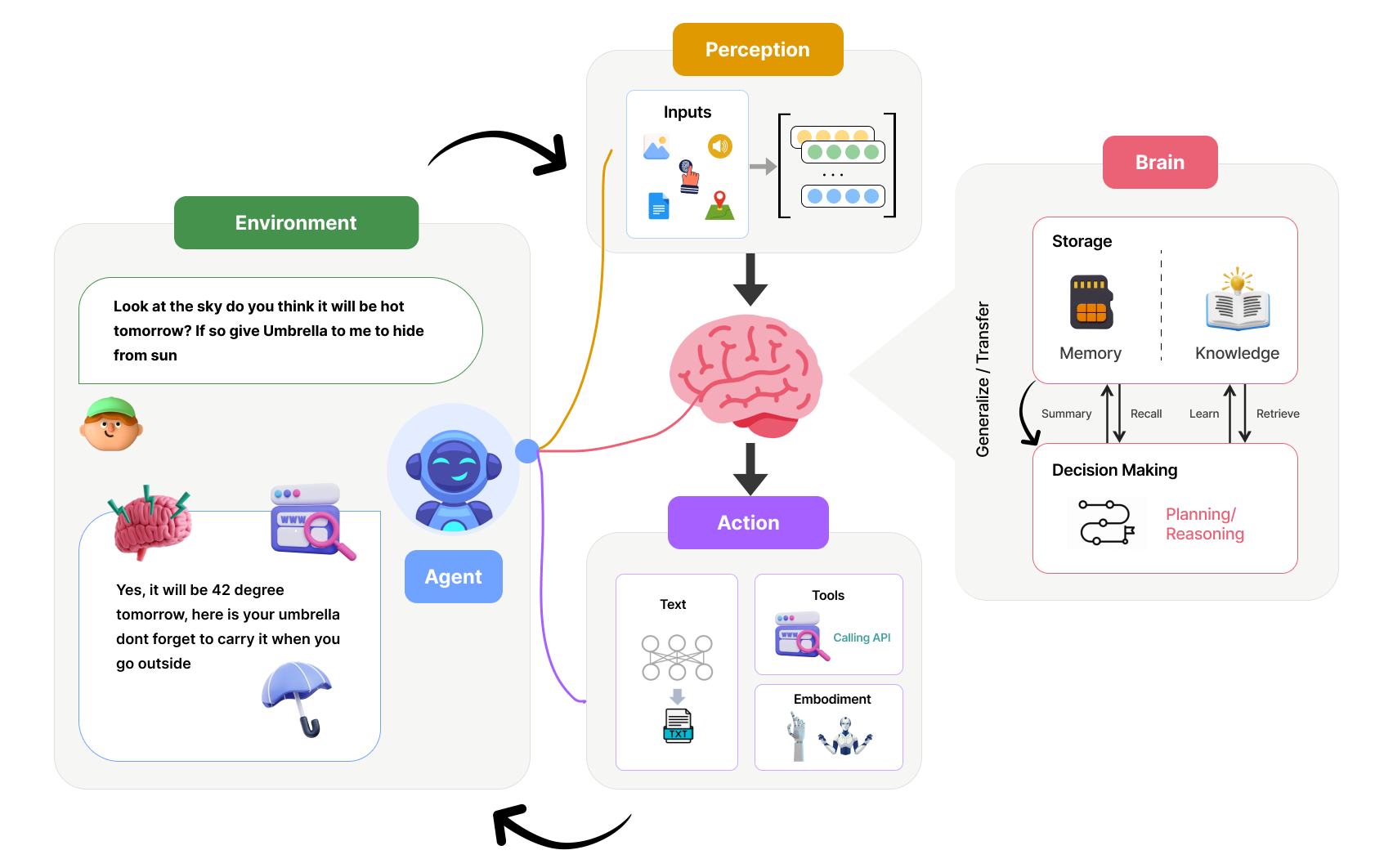

Environment:

The process starts with input from the environment. In this case, a human asks about the weather and requests an umbrella. For autonomous agents, this represents the context or situation they need to respond to.

Perception:

The agent perceives inputs from various sources (shown as icons for people, audio, documents, and location). This is similar to how autonomous agents gather data from sensors, databases, or user inputs.

Brain:

The central part of the system is the "brain," which processes information and makes decisions. It consists of:

- Storage: Divided into Memory (for short-term information) and Knowledge (for long-term information).

- Decision Making: Involves Planning and Reasoning. These components interact through processes like summarizing, recalling, learning, and retrieving information.

Action:

The brain's output leads to actions, which can be:

- Text: Generating language responses.

- Tools: Using external resources (like calling APIs).

- Embodiment: Physical actions or interactions.

For autonomous agents, this represents their output or response to the environment.

In this example, the agent perceives the question about weather, processes it using its knowledge and decision-making capabilities, and responds with a weather prediction and advice about using an umbrella.
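
To make the flow above concrete, here is a minimal sketch of the environment → perception → brain → action loop for the weather example. Everything in it is an assumption made for illustration: the `Brain` class, the `perceive` and `act` helpers, and the hard-coded forecast are hypothetical stand-ins, not part of any real agent framework.

```python
from dataclasses import dataclass, field


@dataclass
class Brain:
    """Toy brain: knowledge is long-term, memory is short-term."""
    knowledge: dict = field(default_factory=lambda: {"rain": "Take an umbrella."})
    memory: list = field(default_factory=list)

    def decide(self, percept: dict) -> str:
        # Store the percept in short-term memory, then look up advice in knowledge.
        self.memory.append(percept)
        advice = self.knowledge.get(percept["forecast"], "No umbrella needed.")
        return f"Forecast: {percept['forecast']}. {advice}"


def perceive(question: str, sensor_forecast: str) -> dict:
    """Bundle the user's question with a (hypothetical) sensor reading."""
    return {"question": question, "forecast": sensor_forecast}


def act(decision: str) -> str:
    """The simplest action type: return a text response."""
    return decision


brain = Brain()
percept = perceive("Will it rain today? Should I take an umbrella?", "rain")
reply = act(brain.decide(percept))
print(reply)  # Forecast: rain. Take an umbrella.
```

In a real system the lookup inside `decide` would be replaced by an LLM call, and `act` could also invoke tools or physical actuators rather than only returning text.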

Core Components for Building an Agentic Workflow

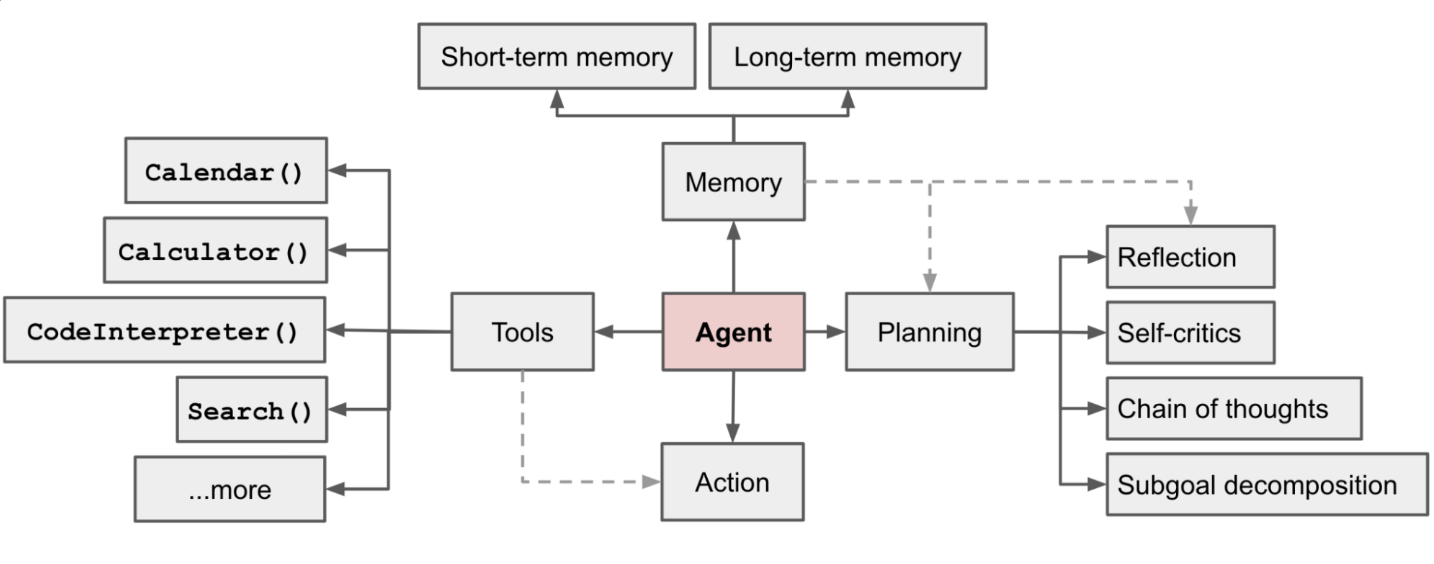

In a Large Language Model (LLM)-powered autonomous agent system, the LLM acts as the agent’s brain, supported by several key components:

Planning in Autonomous Agents

Planning is a critical component in the development of autonomous agents, involving the organization of actions to achieve specified goals. This process requires the agent to anticipate various outcomes and constraints, allowing it to navigate complex environments effectively. Advanced planning algorithms enable agents to devise strategies that optimize their performance, ensuring that the actions taken are not only efficient but also aligned with the overall objectives. This strategic foresight is essential for agents to handle real-world challenges autonomously.

A few of the prompting techniques used for planning and reasoning with an LLM include:

- Chain-of-Thought prompting

- Tree of Thoughts prompting

- ReAct prompting

- Reflexion (self-reflection) prompting

- Chain-of-Thought with Self-Consistency prompting

- Language Agent Tree Search
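
As one illustration from the list above, here is a rough sketch of the self-consistency idea: sample several chain-of-thought completions for the same prompt and keep the final answer that appears most often. The `fake_llm` function and its canned completions are stand-ins for a real sampled model call, and the `Answer:` marker is an assumed output convention, not something a particular model guarantees.

```python
from collections import Counter
from typing import Callable, List


def self_consistent_answer(sample_fn: Callable[[str], str], prompt: str, n: int = 5) -> str:
    """Sample n reasoning paths and return the most common final answer."""
    answers: List[str] = []
    for _ in range(n):
        completion = sample_fn(prompt)
        # Assumed convention: the final answer follows the last "Answer:" marker.
        answers.append(completion.rsplit("Answer:", 1)[-1].strip())
    return Counter(answers).most_common(1)[0][0]


# Stand-in for a sampled LLM call; a real system would query a model here.
_canned = iter([
    "3 + 4 = 7, 7 * 2 = 14. Answer: 14",
    "4 * 2 = 8, 8 + 3 = 11. Answer: 11",   # a faulty reasoning path
    "(3 + 4) * 2 = 14. Answer: 14",
])


def fake_llm(prompt: str) -> str:
    return next(_canned)


result = self_consistent_answer(fake_llm, "What is (3 + 4) * 2? Think step by step.", n=3)
print(result)  # 14
```

The majority vote lets two sound reasoning paths outvote the one faulty path, which is the intuition behind sampling more than one chain of thought.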

Action Execution

Action execution is where the planned strategies come to life. It involves the real-time implementation of the planned steps, requiring the agent to interact dynamically with its environment. Effective action execution is characterized by adaptability and responsiveness, allowing the agent to modify its behavior based on real-time data and unforeseen events. This capability ensures that the agent can perform tasks autonomously and efficiently, responding to environmental changes with minimal human intervention.
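
A small sketch can show what adapting to unforeseen events might look like in code. It assumes a plan is simply an ordered list of named callables and that a caller-supplied fallback decides what to do when a step fails. The names `execute_plan` and `fetch_forecast` and the simulated timeout are all hypothetical.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], str]]


def execute_plan(steps: List[Step], fallback: Callable[[str, Exception], str]) -> List[str]:
    """Run each planned step; on failure, use the fallback's substitute result."""
    results = []
    for name, action in steps:
        try:
            results.append(action())
        except Exception as exc:  # broad on purpose: this is a toy adaptation hook
            results.append(fallback(name, exc))
    return results


def fetch_forecast() -> str:
    # Simulated unforeseen event: the (hypothetical) weather service is down.
    raise TimeoutError("weather service unreachable")


plan = [
    ("fetch_forecast", fetch_forecast),
    ("advise", lambda: "Carry an umbrella just in case."),
]
log = execute_plan(plan, lambda name, exc: f"{name} failed ({exc}); using cached forecast")
for line in log:
    print(line)
```

Here the failed step does not abort the run. The agent records a substitute result and continues with the rest of the plan, which is one simple way to keep human intervention to a minimum.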

To carry out these actions, the agent needs tools to collect and process data.

Tools

Tools are essential resources that enhance the capabilities of autonomous agents, providing them with additional functionalities to perform tasks effectively. These can include software tools, databases, sensors, and computational algorithms that aid in data processing, decision-making, and action execution. Integrating tools into the agent’s workflow allows for more sophisticated behaviors and improved performance. By leveraging these external resources, autonomous agents can handle more complex tasks, process large amounts of data, and interact with their environment in more advanced ways.
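
One common way to wire tools into an agent is a registry that maps tool names to plain functions, so the agent only has to produce a name and some arguments. The sketch below assumes that design. The `tool` decorator, the `TOOLS` dictionary, and both example tools are invented for illustration and do not come from a specific library.

```python
from typing import Callable, Dict

TOOLS: Dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Register a function under a name the agent can refer to."""
    def decorator(fn):
        TOOLS[name] = fn
        return fn
    return decorator


@tool("calculator")
def add(a: float, b: float) -> str:
    return str(a + b)


@tool("weather")
def weather(city: str) -> str:
    # Hypothetical stub; a real tool would call a weather API here.
    return f"No live data for {city} in this sketch."


def call_tool(name: str, **kwargs) -> str:
    """Dispatch a tool call given its name and keyword arguments."""
    if name not in TOOLS:
        return f"Unknown tool: {name}"
    return TOOLS[name](**kwargs)


print(call_tool("calculator", a=2, b=3))  # 5
```

Returning a message for unknown tools, rather than raising, lets the agent read the error as an observation and try a different tool.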

Memory

Memory plays a pivotal role in the functionality of autonomous agents, providing a repository for storing and retrieving information. This capability allows agents to learn from past experiences, make informed decisions, and adapt to new situations. By maintaining a history of interactions and outcomes, memory enables continuous improvement in the agent's performance. Long-term memory systems enhance the agent's ability to handle complex tasks, ensuring that past experiences inform future actions and lead to more accurate and effective behaviors. Finally, there are the agents themselves, which perform all of these tasks.
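
The split between short-term and long-term memory can be sketched with a bounded buffer that archives its oldest entry before evicting it. This is a simplified illustration under stated assumptions: the `AgentMemory` class is hypothetical, and the keyword matching in `recall` stands in for the embedding-based retrieval that production systems often use.

```python
from collections import deque
from typing import Deque, List


class AgentMemory:
    def __init__(self, short_term_size: int = 3):
        self.short_term: Deque[str] = deque(maxlen=short_term_size)
        self.long_term: List[str] = []

    def remember(self, event: str) -> None:
        # If the buffer is full, its oldest entry is about to be evicted: archive it first.
        if len(self.short_term) == self.short_term.maxlen:
            self.long_term.append(self.short_term[0])
        self.short_term.append(event)

    def recall(self, query: str) -> List[str]:
        """Naive keyword retrieval over both stores, oldest events first."""
        words = set(query.lower().split())
        everything = self.long_term + list(self.short_term)
        return [e for e in everything if words & set(e.lower().split())]


memory = AgentMemory(short_term_size=2)
for event in ["user asked about weather", "forecast was rain", "agent suggested umbrella"]:
    memory.remember(event)

print(memory.long_term)         # ['user asked about weather']
print(memory.recall("umbrella"))  # ['agent suggested umbrella']
```

Because nothing is dropped when the short-term buffer overflows, an older interaction can still be recalled later and inform a future decision.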

The Role of Agents

Agents are the core components of autonomous systems, designed to perceive their environment, make decisions, and perform actions autonomously. These entities are equipped with sensors and effectors to interact with their surroundings, processing data to achieve their goals. Designing and implementing an agent means integrating planning, action execution, and memory into one cohesive and efficient system. Effective agent design ensures that the system operates seamlessly, adapting to environmental changes and achieving desired outcomes.
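
To show how these pieces might fit together, the sketch below composes a planner, a set of tools, and a memory log into a single `Agent` object. It is only one possible arrangement. The `Agent` class, the `toy_planner` function, and the two lambda-style tools are hypothetical, and in an LLM-powered system the planner would be a model call rather than a fixed list.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Plan = List[Tuple[str, str]]  # each step is (tool name, argument)


@dataclass
class Agent:
    planner: Callable[[str], Plan]
    tools: Dict[str, Callable[[str], str]]
    memory: List[str] = field(default_factory=list)

    def run(self, goal: str) -> List[str]:
        outputs = []
        for tool_name, arg in self.planner(goal):                   # planning
            result = self.tools[tool_name](arg)                     # action execution via tools
            self.memory.append(f"{tool_name}({arg}) -> {result}")   # memory
            outputs.append(result)
        return outputs


def toy_planner(goal: str) -> Plan:
    # Hypothetical fixed plan; an LLM would normally produce this.
    return [("lookup", goal), ("shout", "done")]


agent = Agent(
    planner=toy_planner,
    tools={"lookup": lambda q: f"notes on {q}", "shout": str.upper},
)
outputs = agent.run("umbrellas")
print(outputs)  # ['notes on umbrellas', 'DONE']
```

Keeping the planner and tools as injected callables means each component can be swapped out, for example replacing `toy_planner` with a model-backed planner, without touching the loop in `run`.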

Reference: https://lilianweng.github.io/posts/2023-06-23-agent/